AI in charities: what’s happening right now (March 2025)

Real-world use cases, opportunities and challenges

The charity sector is always looking for ways to work smarter and stay ahead of the curve. Artificial intelligence (AI) tools like ChatGPT, Claude or Copilot are rapidly becoming part of that conversation.

At our recent AI Community of Practice, we brought together digital leaders and practitioners from UK charities to explore how AI is actually being used, the barriers to adoption, and the ethical considerations that come with it.

Nobody has yet found a game-changing use case. As ever, resources are stretched, and driving AI forward doesn't fall within anybody's specific remit. However, there is excitement, and small wins are beginning to be noticed.

I wonder if real change will come from a new charity that sets out AI-first and is able to talk about lowering costs. Once someone says “we use AI extensively, and our admin costs are under 3% as a result” that will be hard for established charities to ignore.

James Heywood, Policy & Practice Platform Manager, Oxfam GB

Here are some of the key takeaways from the session and other conversations.

Key takeaways

1. Deciding what AI to use (and what to avoid)

Charities are starting to get more structured in how they assess AI tools before jumping in. One organisation has set up a dedicated AI board of senior leaders and practitioners to evaluate tools against factors such as:

mission alignment

bias

privacy

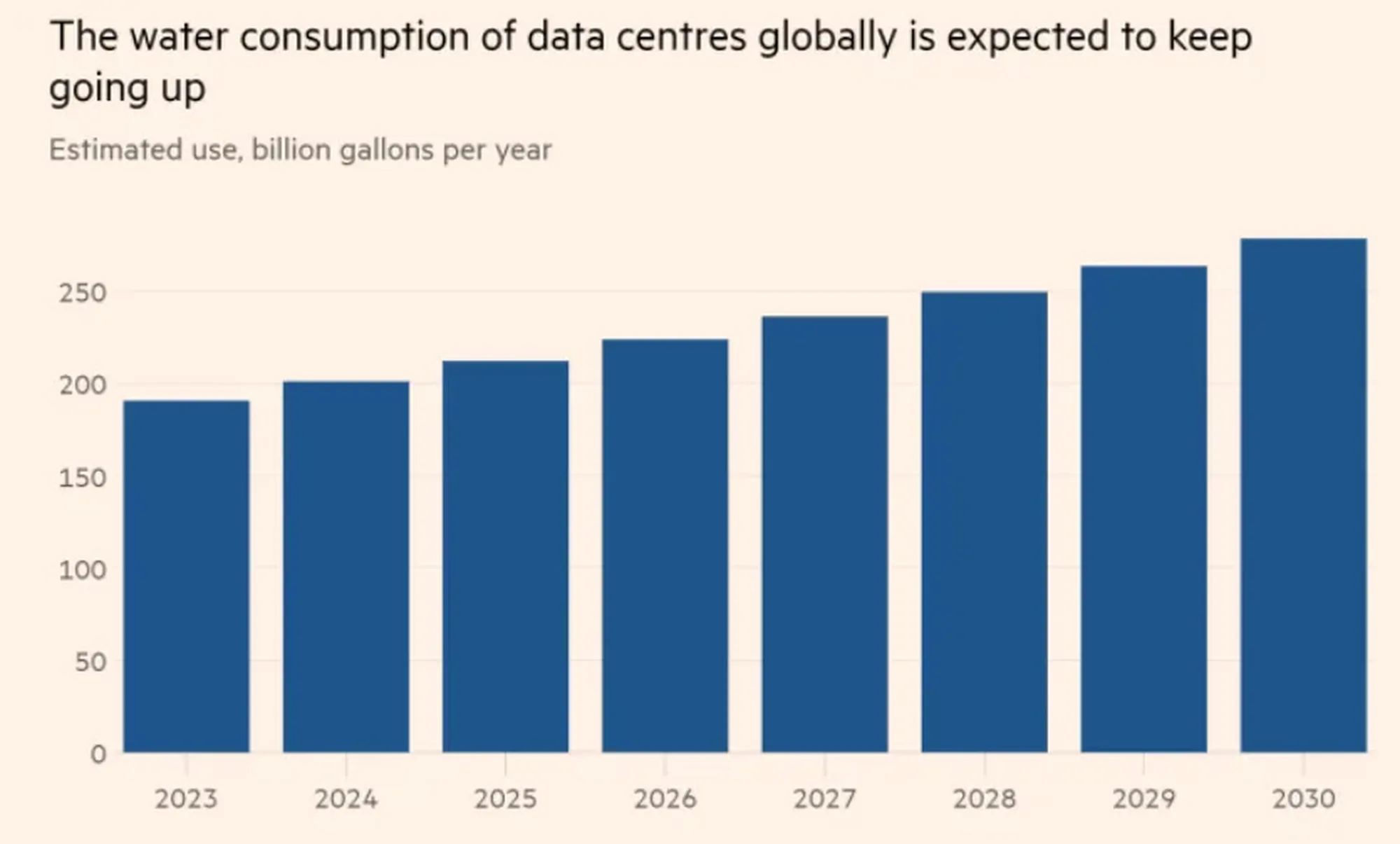

sustainability

Having a clear framework helps ensure AI adoption is intentional, not just reactive.

A solid risk assessment process is key. Without it, charities might end up using tools that store or share sensitive data in ways they wouldn’t expect or be comfortable with.

2. AI for auditing organisational knowledge

One charity found AI particularly useful for auditing and managing knowledge across the organisation. By using AI to scan documents and identify key themes, they streamlined the process of spotting gaps in their knowledge base. This is especially useful for teams working in policy-heavy areas or relying on institutional memory.

3. Balancing internal and external AI policies

For organisations working in human rights, AI presents both opportunities and challenges. One charity shared how they're navigating the tension between using AI internally to boost efficiency while campaigning externally against AI-driven human rights abuses. Their approach is to maintain a clear internal AI policy that aligns with their values and ethical stance.

4. Chatbots on charity websites are useful but still a work in progress

More charities are experimenting with AI-powered chatbots to provide instant responses to website visitors. They can be great for handling frequently asked questions, freeing up staff for more complex queries. But concerns remain about accuracy and tone, meaning adoption is still cautious.

5. AI and content security risks

One surprising issue raised was around confidentiality. If you use AI tools like ChatGPT to edit or proofread a document without adjusting privacy settings, you might inadvertently expose embargoed or confidential information. Some AI tools retain input data to improve their models, which means sensitive charity content could resurface elsewhere.

Once your data has been absorbed into an AI model, removing it is much harder than just deleting a webpage. This raises big questions about data control and ownership.

6. Ethical AI procurement and its social impact

Some charities are beginning to scrutinise how AI tools are trained and powered, particularly when working with agencies. They’re asking suppliers tough questions about AI’s environmental footprint and ethical implications before bringing new tools on board. It’s still early days, but awareness is growing.

7. Still searching for strong AI use cases

Despite all the AI hype, many charities are struggling to find truly impactful use cases. While the potential is clear, leaders want more concrete examples of AI making a real difference before they commit resources. Some have experimented with AI but found the results underwhelming, reinforcing the need for more proven case studies.

8. AI adoption hindered by capacity issues

One of the biggest challenges is that AI is not anyone’s full-time job. Digital teams in charities are already overstretched, and while there’s interest in AI, finding the time to explore and implement it is tough. Without dedicated roles or training, adoption will be slow.

9. Interest is high, funding is low

According to the recent Charity Digital Skills Report, charities are keen to experiment with AI, but funding remains a major roadblock. Many want to explore AI tools but lack the budget to invest in them or upskill their teams. This highlights the need for low-cost, accessible AI solutions built for the sector.

Final thoughts

AI is already making a difference in charities, but adoption is uneven. Some organisations are finding practical applications, while others are still figuring out where AI fits in. Strong governance, clear policies, and a focus on real-world impact rather than just following trends will be key to making AI work for the sector.

We’ll keep tracking developments and sharing what’s working – and what’s not – as charities continue to explore AI’s role in their work.

Let us know which of these use cases you're interested in.